Deep learning is now one of the fastest growing and most exciting areas of machine learning. Many outstanding papers have been published and many high-quality open source deep learning frameworks are available. However, the paper is usually very concise and assumes that the reader has a considerable understanding of deep learning, which makes beginners often stuck in the understanding of some concepts, reading the paper seems to understand, very difficult. On the other hand, even with an easy-to-use deep learning framework, if you don't understand the common concepts and basic ideas of deep learning, you don't know how to design, diagnose, and debug the network when faced with real-world tasks.

This series of articles aims to intuitively and systematically sort out the common concepts and basic ideas in deep learning, so that readers have an intuitive understanding of the important concepts and ideas of deep learning, so as to "know the truth and know why", thus reducing follow-up understanding. The difficulty of the paper and practical application. This series of articles attempts to describe in a concise language, avoiding mathematical formulas and complicated details. This article is the third in a series of articles designed to introduce the application of deep learning in other tasks of computer vision.

Network compression

Despite the superior performance of deep neural networks, the huge computational and storage overhead is a challenge for their deployment in real-world applications. Studies have shown that there are a large amount of redundancy in the parameters of the neural network. Therefore, there is a lot of work dedicated to reducing network complexity while ensuring accuracy.

Low Rank Approximation The original weight matrix is ​​approximated by a low rank matrix. For example, the SVD can be used to obtain the optimal low rank approximation of the original matrix, or the Toeplitz matrix can be used to fit the Krylov decomposition to approximate the original matrix.

Pruning After the training is over, some unimportant neurons can be connected (the weight value can be measured by the sparse constraint in the loss function) or the entire filter can be removed, followed by several rounds of fine-tuning. In actual operation, the pruning of the neuron connection level will make the result sparse, which is not conducive to cache optimization and memory access, and some need to specially design the supporting runtime. In contrast, filter-level pruning can run directly under an existing runtime, and the key to filter-level pruning is how to measure the importance of the filter. For example, the degree of sparseness of the convolution result, the effect of the filter on the loss function, or the effect of the convolution result on the next layer of results can be measured.

Quantization Clusters the weight values, replacing the original weight values ​​with cluster center values, and Huffman coding, which may include scalar quantization or product quantization. However, if only the weight itself is considered, it is easy to cause the quantization error to be low, but the classification error is high. Therefore, the Quantized CNN optimization goal is to minimize the reconstruction error. In addition, hashing can be used, that is, weights mapped into the same hash bucket share the same parameter value.

Decrease the range of data values ​​By default, data is a single-precision floating-point number, which is 32 bits. Studies have found that switching to half-precision floating-point numbers (16 bits) hardly affects performance. Google TPU uses 8-bit integers to represent data. The extreme case is that the value range is binary or tri-valued (0/1 or -1/0/1), so that all calculations can be done quickly using only bit operations, but how to train a binary or tertiary network is a key . The usual practice is that the network feedforward process is binary or triple, and the gradient update process is a real value.

In addition, some studies have concluded that binary representations have limited representation capabilities, so they use an additional floating point number to scale the results of binary convolution to improve network representation.

Streamlined structure design There is a research work to directly design a streamlined network structure. E.g,

Bottleneck structure and 1 × 1 convolution. This design concept has been widely used in the design of Inception and ResNet series networks.

Packet convolution.

Expand the convolution. Use the expansion convolution to expand the receptive field while keeping the parameter amount constant.

Knowledge distillation Training small networks to approximate large networks, but how to approach large networks is still inconclusive.

Hardware and software co-design Common hardware includes two categories: (1). General hardware, including CPU (low latency, good at serial, complex operations) and GPU (high throughput, good at parallel, simple operations). (2). Dedicated hardware, including ASIC (fixed logic devices such as Google TPU) and FPGA (programmable logic devices, flexible, but not as efficient as ASIC).

Fine-grained image classification

Compared to (general) image classification, fine-grained image classification requires more refined image categories. For example, we need to determine which kind of bird, which car, or which type of aircraft the target is. Usually, the differences between these subclasses are very small. For example, the difference between the appearance of the Boeing 737-300 and the Boeing 737-400 is only the number of windows. Therefore, fine-grained image classification is a more challenging task than (universal) image classification.

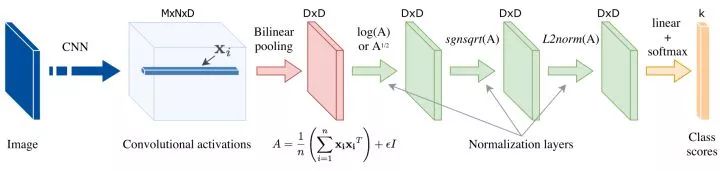

The classic approach to fine-grained image classification is to first locate different parts of the target, such as the bird's head, feet, wings, etc., then extract features from these parts, and finally combine these features for classification. The accuracy of such methods is high, but this requires manual labeling of the dataset. A major research trend of fine-grained classification is to use only image markers for learning without additional monitoring information, which is represented by a bilinear CNN-based method.

Bilinear CNN (bilinear CNN) examines the interaction between different dimensions by computing the outer product of the convolution description vector. Since different dimensions of the description vector correspond to different channels of the convolution feature, and different channels extract different semantic features, the relationship between different semantic features of the input image can be simultaneously captured by the bilinear operation.

Streamlined bilinear convergence The results of bilinear convergence are very high dimensional, which consumes a lot of computational and storage resources, while greatly increasing the number of parameters for subsequent fully connected layers. Many follow-up studies aim to design a more streamlined bilinear convergence strategy that broadly includes the following three broad categories:

(1) PCA dimensionality reduction. Before the bilinear convergence, the depth description vector is subjected to PCA projection dimensionality reduction, but this will make the dimensions no longer relevant, which in turn affects performance. A compromise is to reduce the size of only one PCA.

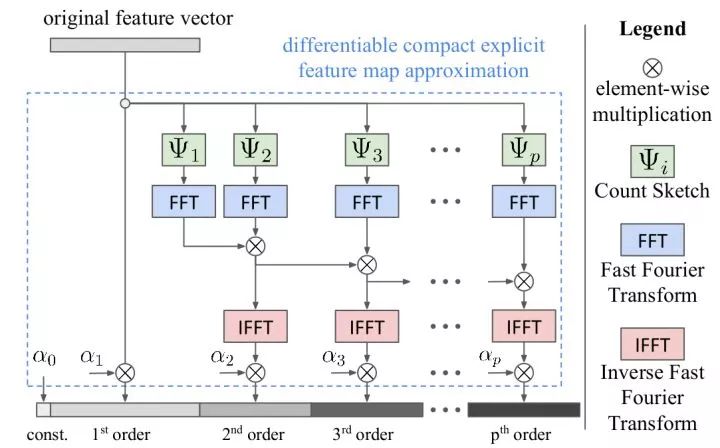

(2) Approximate nuclear estimation. It can be shown that using a linear SVM classification after bilinear converging results is equivalent to using a polynomial kernel between the description vectors. Since the mapping of the two vector outer products is equal to the two vectors separately mapped and then convolved, there is research work using a random matrix approximation vector mapping. In addition, by approximating the kernel estimate, we can capture more than second-order information (as shown below).

(3) Low rank approximation. The low-rank approximation is performed on the parameter matrix of the fully connected layer that is subsequently used for classification, so that we do not need to explicitly calculate the bilinear convergence result.

"image captioning"

"Looking at a picture" is intended to produce a textual description of an image in one or two sentences. This is a cross-cutting task in both visual and natural language processing.

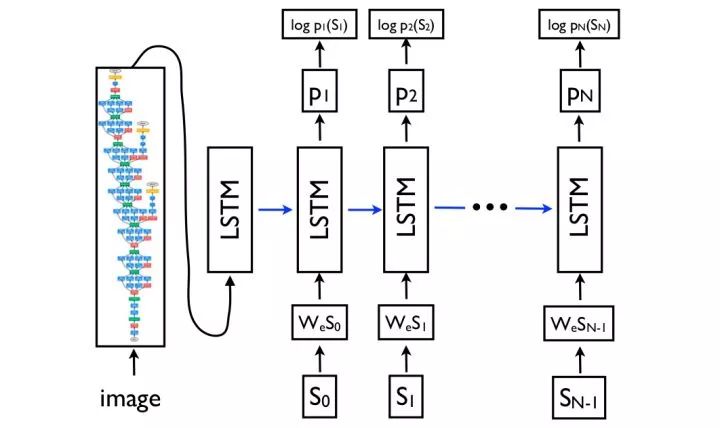

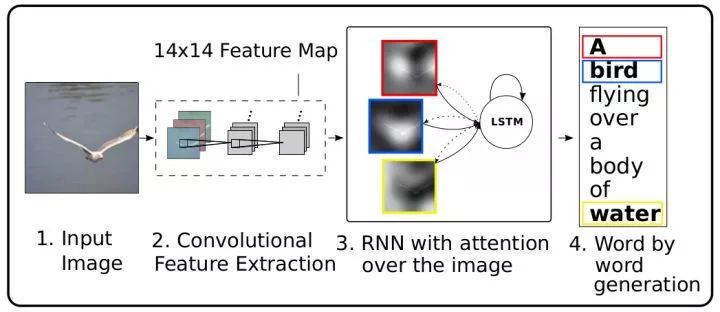

Encoder-decoder networks The basic idea of ​​graph-based speech network design, which is borrowed from machine translation ideas in natural language processing. The source language encoding network in the machine translation is replaced with the CNN encoding network of the image to extract features of the image, and then the target language decoding network is used to generate the text description.

Show, attend, and tell Attention mechanism is a common technique used in machine translation to capture long-distance dependencies. It can also be used to view pictures. In the decoding network, in addition to predicting the next word, each time, a two-dimensional attention map is needed to perform weighted convergence on the deep convolution features. An additional benefit of using the attention mechanism is that the network can be visualized to see which parts of the image the network notices as each word is generated.

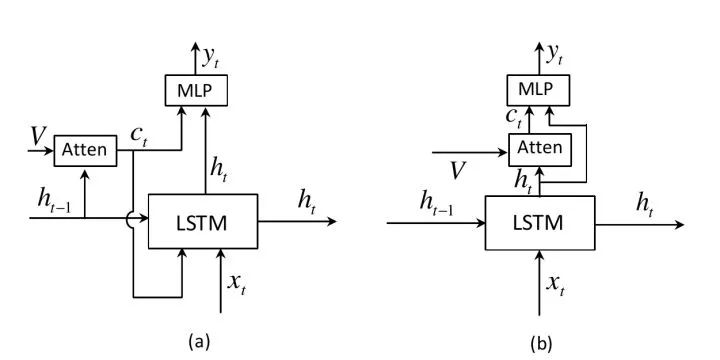

The attention mechanism of Adaptive attention generates a two-dimensional attention map for each word to be predicted (Fig. (a)), but for words like the, of, it does not actually need to use clues from the image, and some Words can be inferred from the above and do not require image information. This work extends the LSTM to propose a "visual sentinel" mechanism to determine whether the above language information should be more focused on the current word or more on the image information (Figure (b)). In addition, unlike previous work, which uses the hidden layer state calculation attention map at the previous moment, the work uses the current hidden layer state.

Visual question answering

Given an image and a textual question about the content of the image, the visual question and answer is designed to select the correct answer from a number of candidate text responses. The essence is the classification task, and some work is to use RNN decoding to generate text answers. Visual Q&A is also a cross-cutting task in both visual and natural language processing.

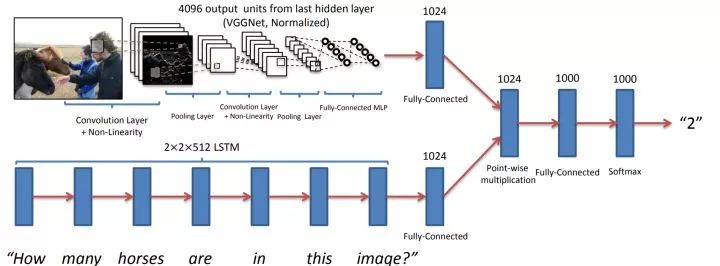

Basic idea Use CNN to extract image features from images, use RNN to extract text features from text problems, then try to fuse visual and text features, and finally classify them through fully connected layers. The key to this task is how to fuse the features of these two modalities. A direct fusion scheme is to combine visual and text features into a vector, or to add or multiply visual and text feature vectors by element.

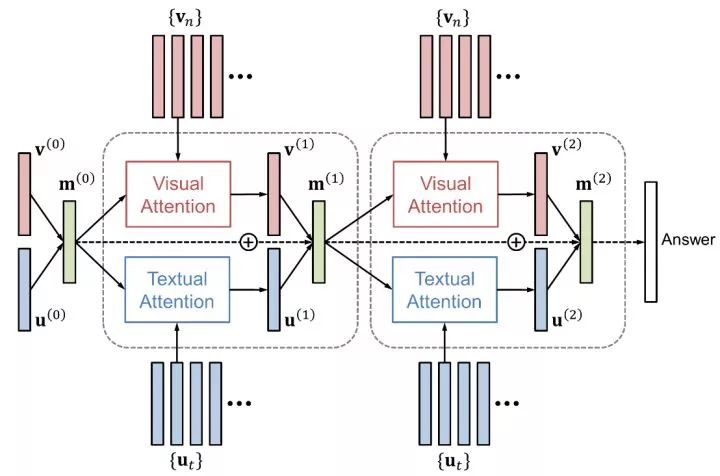

Attention mechanism Similar to “talking to the pictureâ€, using the attention mechanism will also improve the performance of the visual question and answer. Attention mechanisms include both visual attention ("where to look") and text attention ("which word to focus on"). HieCoAtten produces visual and text attention at the same time or alternately. DAN maps the visual and text attentional results to the same space, and at the same time produces the next visual and textual attention.

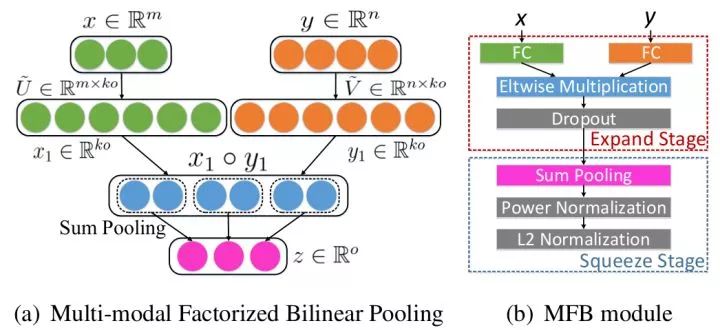

Bilinear Fusion The outer product of the visual feature vector and the text feature vector captures the interaction between the dimensions of the two modal features. In order to avoid explicit calculation of high-dimensional bilinear convergent results, the idea of ​​reduced bilinear convergence in fine-grained recognition can also be used for visual quiz. For example, the MFB uses a low rank approximation and uses both visual and text attention mechanisms.

Network visualization and network understanding

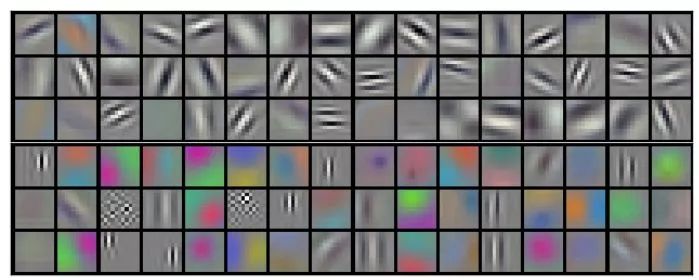

These methods are intended to provide some visual means to understand deep convolutional neural networks. Direct visualization of the first layer filter Since the filter of the first layer of convolutional layer slides directly in the input image, we can directly visualize the first layer of the filter. It can be seen that the first layer of weight focuses on the edges of a particular orientation as well as a particular color combination. This is consistent with the visual mechanism of the creature. However, since the high-level filter does not directly act on the input image, direct visualization is only valid for the first layer filter.

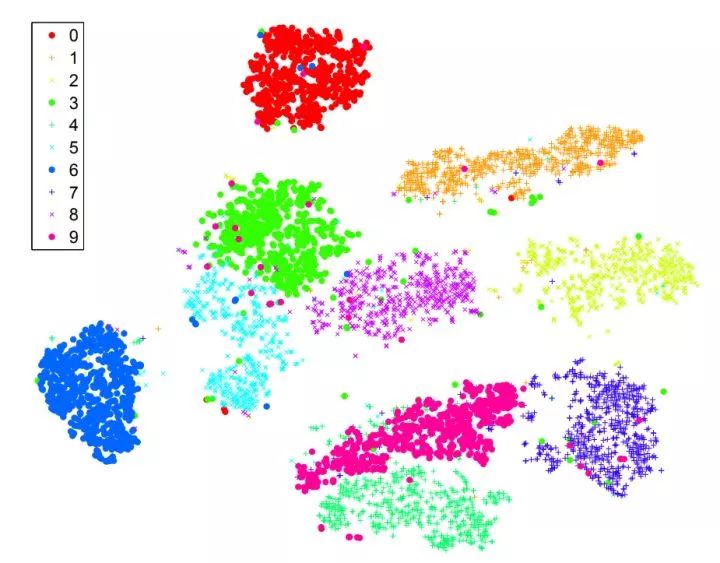

t-SNE performs low-dimensional embedding of the image's fc7 or pool5 features, such as dimensionality reduction to 2 dimensions so that it can be drawn in a two-dimensional plane. Images with similar semantic information should be similar in distance in the t-SNE results. Unlike PCA, t-SNE is a nonlinear dimensionality reduction method that preserves the distance between parts. The figure below is the result of t-SNE directly on the MNIST original image. It can be seen that MNIST is an easy data set, and image clustering belonging to different categories is very obvious.

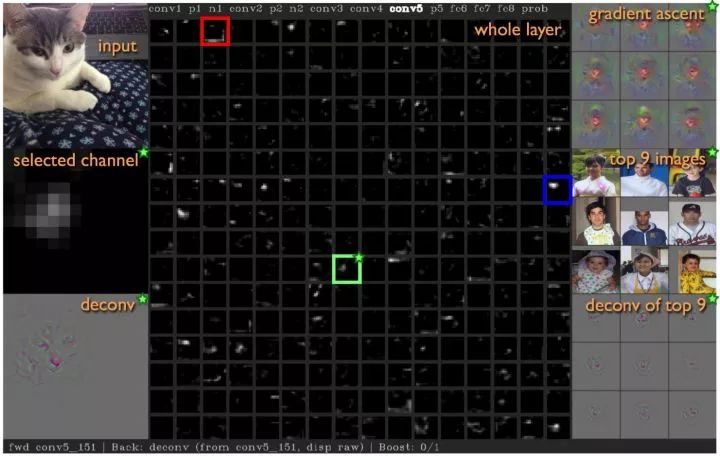

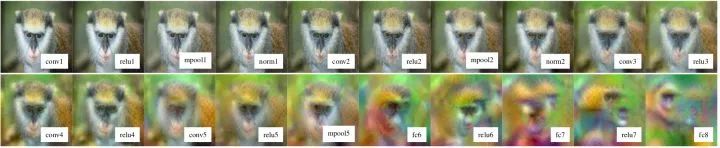

Visualize the middle layer activation value Draw the response of different feature maps for a specific input image. It has been observed that even if there are no face or text related categories in ImageNet, the network will learn to recognize these semantic information to assist in subsequent classification.

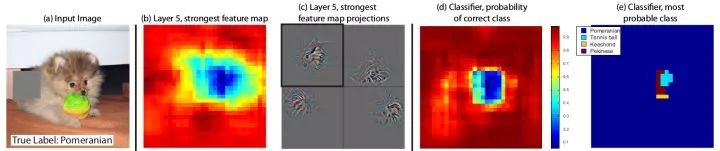

Maximum Response Image Region Select a particular intermediate layer neuron, enter a number of different images into the network, and find the region of the image that maximizes the response of the neuron to see which semantic features the neuron is used to respond to. The reason for the "image area" rather than the "complete image" is that the receptive field of the middle layer neurons is limited and does not cover all the images.

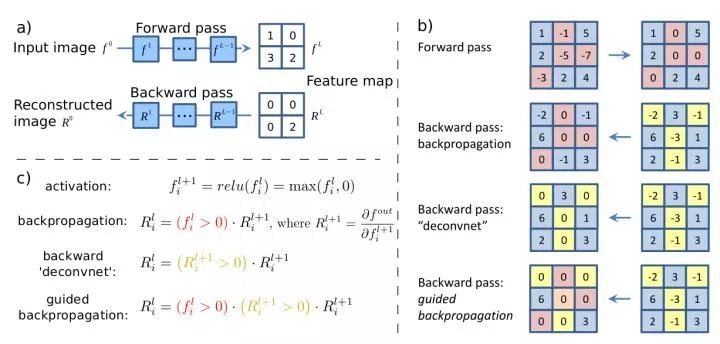

Input saliency map Calculates the partial derivative of a particular neuron to the input image for a given input image. It expresses the effect of different pixels of the input image on the response of the neuron, ie, the change in the response of different pixels of the input image will result in a change in the response value of the neuron. Guided backprop only propagates positive gradient values ​​backwards, that is, only focuses on the positive effects of neurons, which produces better visualization than standard backpropagation.

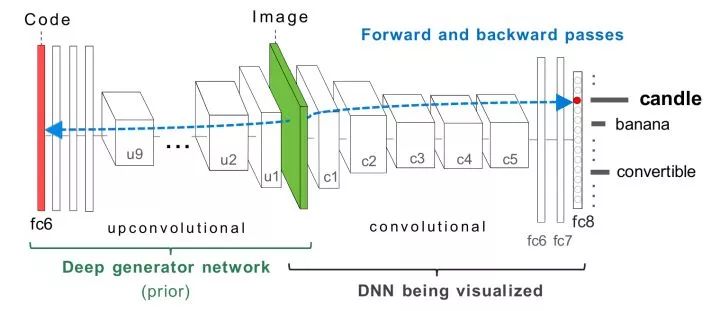

Gradient Rise Optimization Select a particular neuron, calculate the partial derivative of a particular neuron on the input image, and optimize the input image using gradient ascent until convergence. In addition, we need some regularizations to make the resulting image closer to the natural image. In addition, in addition to optimization on the input image, we can also optimize the fc6 feature and generate the desired image from it.

DeepVisToolbox This toolkit provides the above four visualization results.

The occlusion experiment blocks a different area of ​​the image with a gray square, and then feeds the network to observe its effect on the output. The area that has the greatest impact on the output is the area that is most important for judging the category. As can be seen from the figure below, blocking the dog's face has the greatest impact on the results.

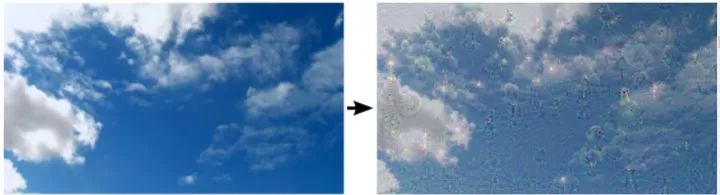

Deep dream selects an image and a specific layer. The optimization goal is to maximize the square of the activation value of the layer by increasing the gradient of the image. In fact, this is a semantic feature captured by positive feedback of this layer of neurons. It can be seen that there are many dog ​​patterns in the generated image, because there are 200 classes in the ImageNet Dataset 1000 category about dogs, so many neurons in the neural network are dedicated to identifying dogs in the image.

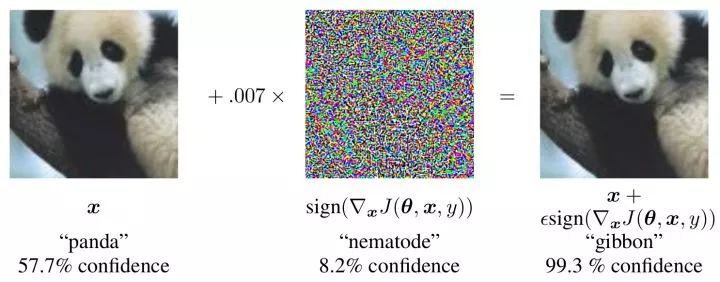

Adversarial examples Select an image and a category that is not its true mark, calculate the partial derivative of the input image for that category, and optimize the gradient for the image. Experiments have found that after making subtle changes to the image, the network can be considered with considerable confidence that the image belongs to the wrong category. In practical applications, confrontational samples will pose threats to areas such as finance and security. Some researchers believe that this is because the image space is very high dimensional, even if there is a lot of training data, it can only cover a small part of the space. As long as the input deviates slightly from the manifold space, it is difficult for the network to get a normal judgment.

Texture synthesis and style transform

Given a small image containing a particular texture, texture synthesis is intended to produce a larger image containing the same texture. Given a normal image and an image containing a particular drawing style, the style migration is designed to preserve the original style while migrating the given style to the image.

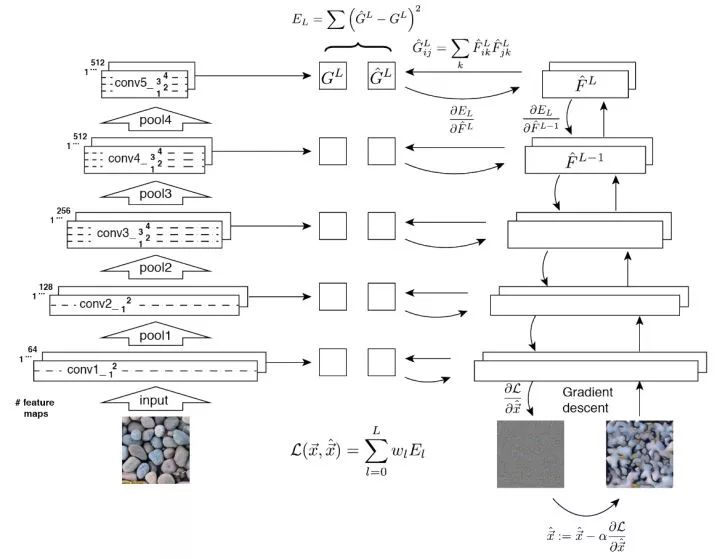

Feature inversion The basic idea of ​​these two types of problems. Given an intermediate layer feature, we hope to produce an image with features and a given feature through iterative optimization. In addition, feature reverse engineering can also tell us how much information is contained in the middle layer features. It can be seen that there is almost no loss of image information in the features of the lower layers, and the high-level, especially the fully-connected features, lose most of the detail information. On the other hand, high-level features are less sensitive to image color and texture changes.

Gram matrix Given the depth convolution feature of D × H × W, we convert it to a matrix X of D × (HW), then the corresponding Gram matrix of the layer feature is defined as  Through the outer product, the Gram matrix captures the co-occurrence relationship between different features.

Through the outer product, the Gram matrix captures the co-occurrence relationship between different features.

Basic idea of ​​texture generation The feature reverse engineering of the Gram matrix of a given texture pattern. The Gram matrix of each layer feature of the generated image is brought close to each layer Gram of a given texture image. Low-level features tend to capture detailed information, while high-level features can capture larger areas of features.

The basic idea of ​​style migration The optimization goal consists of two items, which make the content of the generated image close to the original image content, and make the generated image style close to the given style. The style is represented by the Gram matrix, and the content is directly reflected by the neuron activation value.

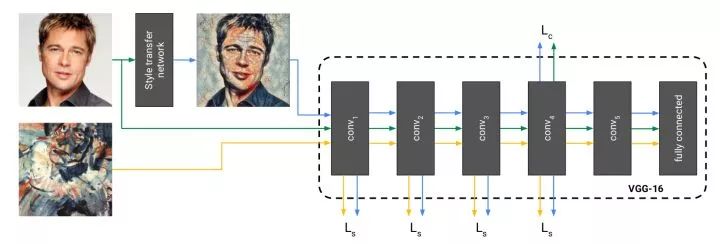

Direct generation of style-migrated images The disadvantage of the above method is that it requires multiple iterations to converge. The solution proposed by this work is to train a neural network to directly generate images of style migration. Once the training is over, the style migration is only required to feed the network once, which is very efficient. During training, the generated image, the original image, and the genre image are fed forward to a fixed network to extract different layer features for calculating the loss function.

The example normalization and batch normalization work on one batch, and the average and variance of the example normalization are determined only by the image itself. Experiments have found that using example normalization in a style migration network can remove and sample relevant contrast information from the image to simplify the generation process.

Conditional instance normalization One problem with the above method is that we need to train a model separately for each different style. Due to the commonality between different styles, the work is designed to allow the network to share parameters corresponding to different styles of style migration. Specifically, it modifies the example normalization in the style migration network to have N sets of scaling and translation parameters, each group corresponding to a different style. In this way, we can get N styles of migration images simultaneously through a feedforward process.

Face verification/recognition

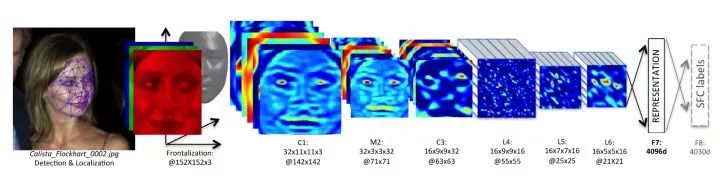

Face verification/recognition can be considered as a more elaborate fine-grained image recognition task. Face verification is to give two images to determine whether they belong to the same person, and face recognition is to answer who the person in the image is. A face verification/recognition system typically includes three major steps: detecting a face in an image, locating a feature point, and verifying/identifying a face. The challenge with face verification/recognition is that small sample learning is required. Usually, there is only one corresponding image per person in the data set, which is called one-shot learning.

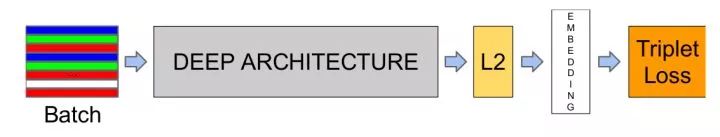

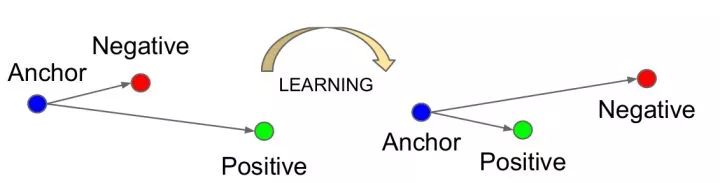

The two basic ideas are treated as classification problems (which need to face a very large number of categories) or as a measure of learning problems. If the two images belong to the same person, we want their depth features to be closer, otherwise we hope they are not close. Thereafter, verification is performed based on the distance between the depth features (a threshold is set for the feature distance to determine whether it belongs to the same person), or (k-nearest neighbor classification) is recognized.

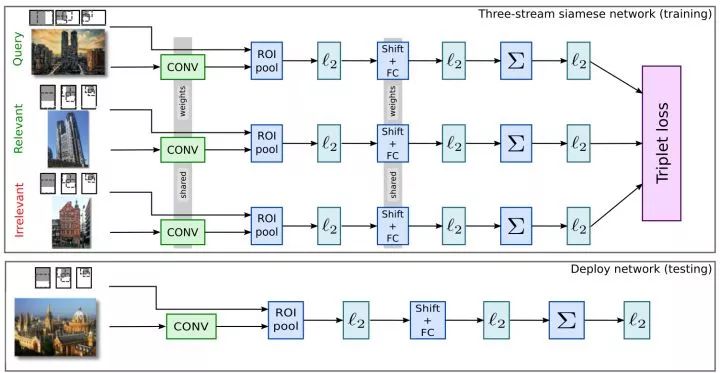

DeepFace was the first to successfully use deep neural networks for face verification/recognition models. DeepFace uses local joins with non-shared parameters. This is due to the different features of different regions of the face (for example, the eyes and mouth have different characteristics), and the "shared parameter" property of the classical convolutional layer is no longer applicable in face recognition. Therefore, local connections that do not share parameters are used in the face recognition network. It uses a siemese network for face verification. When the depth features of the two images are less than a given threshold, they are considered to be from the same person.

FaceNet ternary input, the distance between the desired and negative samples is greater than the distance between the positive samples at a certain interval (such as 0.2). In addition, the choice of input ternary is not random, otherwise the network can't learn anything because of the large difference between it and the negative sample. Choosing the most difficult triples (ie, the farthest positive sample and the nearest negative sample) will cause the network to fall into local optimum. FaceNet uses a semi-difficult strategy to select negative samples that are farther than the positive samples.

Large interval cross entropy loss A major research hotspot in recent years. Due to the large intra-class fluctuations and high similarity between classes, research work aims to improve the ability of classical cross-entropy loss to judge depth features. For example, L-Softmax enhances the optimization goal to increase the angle between the parameter vector and the depth feature of the corresponding category. A-Softmax further constrains the parameter vector length of L-Softmax to 1, which makes the training more concentrated to optimize the depth features and angles. In practice, both L-Softmax and A-Softmax are difficult to converge, and an annealing method is used during training, which is gradually annealed from standard softmax to L-Softmax or A-Softmax.

Liveness detection Determines whether a face is from a real person or from a photo, etc. This is a key issue that needs to be solved for face verification/recognition. The current mainstream practice in the industry is to use human expression changes, texture information, blinking, or let the user complete a series of actions.

Image retrieval

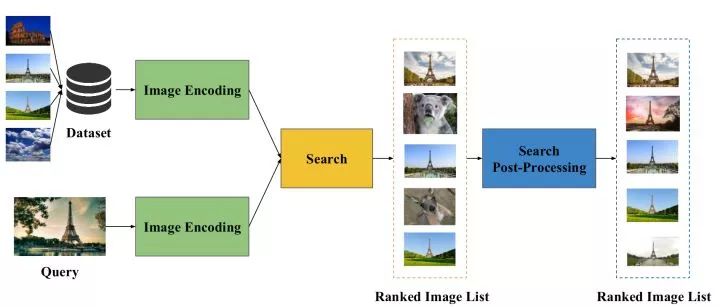

Given a query image that contains a specific instance (eg, a particular target, scene, building, etc.), the image retrieval is intended to find an image containing the same instance from the database image. However, due to the different viewing angles, illumination, or occlusion of different images, how to design an effective and efficient image retrieval algorithm that can cope with these intra-class differences is still a research problem.

Typical Process for Image Retrieval First, try to extract a representation vector of a suitable image from the image. Second, a nearest neighbor search is performed on these representation vectors using Euclidean or cosine distances to find similar images. Finally, some post-processing techniques can be used to fine tune the search results. It can be seen that the key to determining the performance of an image retrieval algorithm is the quality of the extracted image representation.

(1) Unsupervised image retrieval

Unsupervised image retrieval aims to extract image representations using only the ImageNet pre-training model as a fixed feature extractor without the aid of other supervisory information.

Intuitive Ideas Since the deep full join feature provides a high-level description of the image content and is a "natural" vector form, an intuitive idea is to directly extract the depth fully connected feature as the representation vector of the image. However, since the full-connection feature is intended to perform image classification and lacks description of image details, the retrieval accuracy of this idea is general.

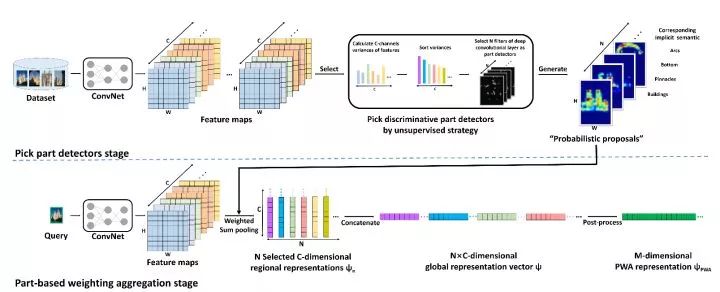

Using deep convolution features Since deep convolution features have better detail information and can handle image input of any size, the current mainstream method is to extract deep convolution features and obtain them by weighted global summation (sum-pooling). The representation vector of the image. Among them, the weight reflects the importance of different position features, and can have two forms: spatial direction weight and channel direction weight.

The CroW deep convolution feature is a distributed representation. Although the response value of a neuron is not useful for judging whether a corresponding region contains a target, if a plurality of neurons have a large response value at the same time, the region is likely to contain the target. Therefore, CroW adds the feature maps along the channel direction to obtain a two-dimensional aggregate map, and normalizes it and normalizes the result of the root number as the spatial weight. The channel weight of CroW is defined according to the sparsity of the feature graph, which is similar to the IDF feature in the TF-IDF feature in natural language processing, and is used to enhance features that are infrequent but discriminative.

Class weighted features This method attempts to combine the network's category prediction information to make spatial weights more discriminating. Specifically, the CAM is used to obtain semantic information of the most representative regions corresponding to each category in the pre-training network, and then the normalized CAM results are used as spatial weights.

PWA PWA found that the different channels of the deep convolution feature correspond to the response of different parts of the target. Therefore, PWA selects a series of discriminative feature maps, and normalizes the results as spatial weights, and cascades the results as the final image representation.

(2) Supervised image retrieval

Supervised Image Retrieval First, the ImageNet pre-training model is fine-tuned on an additional training data set, and then the image representation is extracted from the fine-tuned model. For better results, the training data set for fine tuning is usually similar to the data set to be used for retrieval. In addition, the candidate region network can be used to extract foreground regions of the image that may contain the target.

The siemese network is similar to the face recognition approach. Using binary or ternary (++-) inputs, the training model makes the distance between similar samples as small as possible, and the distance between similar samples is as small as possible. Big.

Object tracking

Target tracking is designed to track the motion of a target in a video. Usually, the position of the target in the first frame of the video is given in the form of a bounding box, and we need to predict the bounding box of the target in other frames. Target tracking is similar to target detection, but the difficulty of target tracking is that you don't know what the target is to track in advance, so you can't collect enough training data in advance to train a special detector.

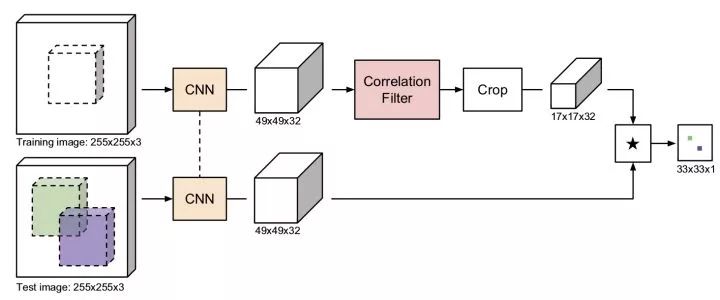

Twins Network Similar to the idea of ​​face verification, using the twin network, one input first frame encloses the image inside the box, and the other input candidate image areas of other frames, and outputs the similarity of the two images. We don't need to traverse all possible candidate regions of other frames. With a full convolutional network, we only need to feed the entire image once. A cross-correlation operation (convolution) yields a two-dimensional response map in which the maximum response position determines the bounding box position that needs to be predicted. The method based on the twin network is fast and can process images of any size.

CFNet correlation filtering trains a linear template to distinguish image regions from surrounding areas. With Fourier transform, correlation filtering is very efficient. CFNet combines off-training twin-trained network and online update related filtering modules to improve the tracking performance of lightweight networks.

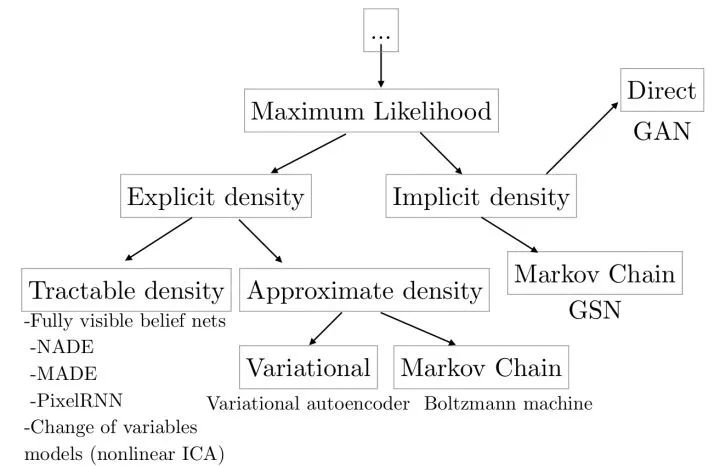

Generative models

Such models are designed to learn the distribution of data (images) or to sample new images from the distribution. The generative model can be used for super-resolution reconstruction, image coloring, image conversion, image generation from text, potential representation of learning images, semi-supervised learning, and the like. In addition, the generative model can be combined with reinforcement learning for simulation and inverse reinforcement learning.

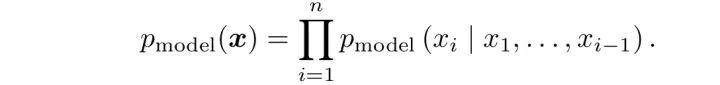

Explicit modeling Based on the conditional probability formula, the maximum likelihood estimation is directly performed to learn the distribution of the image. The disadvantage of this method is that since each pixel depends on the previous pixel, the image generation is slower because it needs to be sequentially performed from a corner. For example, WaveNet can generate speech similar to human speech, but since it cannot be generated in parallel, it takes 2 minutes to get 1 second of speech, which cannot be achieved in real time.

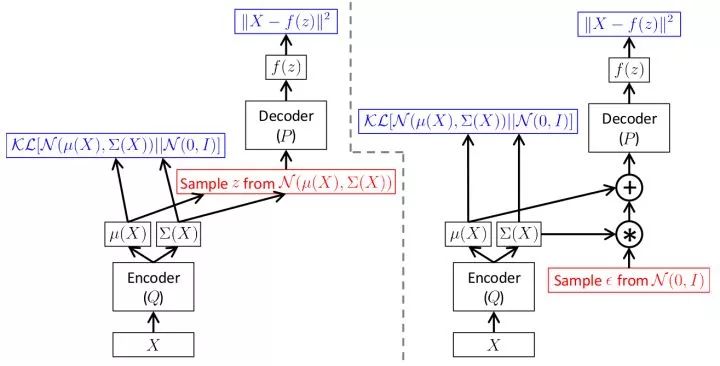

Variational auto-encoder (VAE) To avoid the drawbacks of explicit modeling, the variational self-encoder implicitly models the data distribution. It considers that the generation of the image is controlled by a hidden variable and assumes that the implicit variable obeys a diagonal Gaussian distribution. The variational self-encoder generates an image from a hidden variable through a decoding network. Since the maximum likelihood estimation cannot be directly performed, in training, similar to the EM algorithm, the variational self-encoder constructs the lower bound function of the likelihood function and optimizes the lower bound function. The advantage of the variational self-encoder is that, since the dimensions are independent, we can control the variation of the output image by controlling the hidden variables.

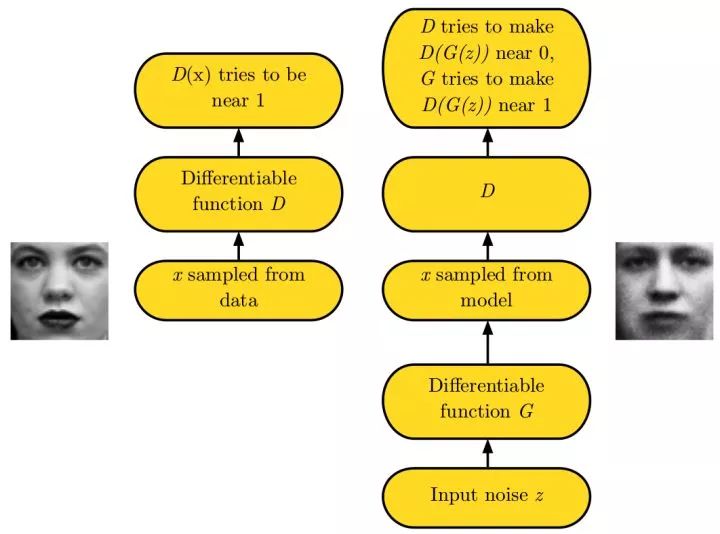

Generative adversarial networks (GAN) Because of the difficulty in learning data distribution, the production-oriented network circumvents this step and directly generates new images. The generated confrontation network uses a generation network G to generate an image from random noise, and a discrimination network D judges that its input image is a real/falsified image. At the time of training, the objective of discriminating the network D is to be able to judge the real/falsified image, and the target of generating the network G is such that the discriminating network D tends to judge that its output is a real image.

In practice, the direct training of the generated confrontation network will encounter the mode collapse problem, that is, the generated confrontation network cannot learn the complete data distribution. Subsequently, improvements in LS-GAN and W-GAN have emerged. Compared with the variational self-encoder, the details of the generated against the network are better. The following links tidy up a number of papers related to the generated confrontation network: hindupuravinash/the-gan-zoo. The following links tidy up the skills of many training-generational defense networks: soumith/ganhacks.

Video classification

Most of the tasks described above can also be used for video data. Here, only the video classification task is taken as an example to briefly introduce the basic method of processing video data.

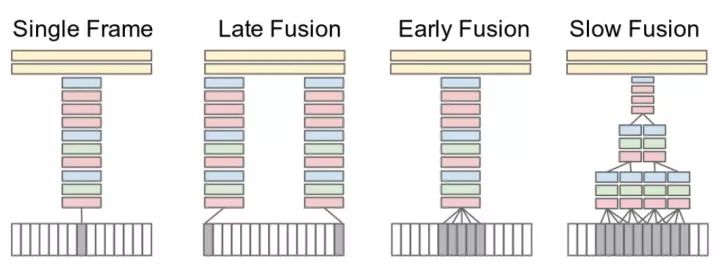

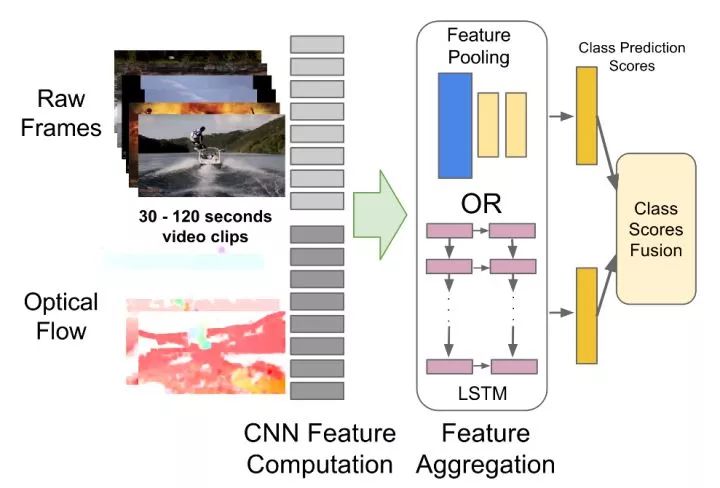

Multi-frame image feature convergence This method treats video as a combination of images in a series of frames. The network simultaneously receives several frame images (for example, 15 frames) belonging to one video segment, and extracts their depth features respectively, and then fuses the image features to obtain features of the video segment, and finally classifies them. The experiment found that using "slow fusion" works best. In addition, using a single frame image for classification can yield very competitive results, which means that a single frame image already contains a lot of information.

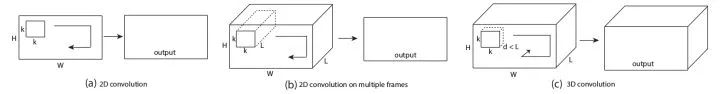

3D Convolution Extends a classical 2D convolution to a 3D convolution so that it is also locally connected in time dimension. For example, a 3x3 convolution of VGG can be expanded to a 3x3x3 convolution, and a 2x2 confluence can be expanded to a 2x2x2 confluence.

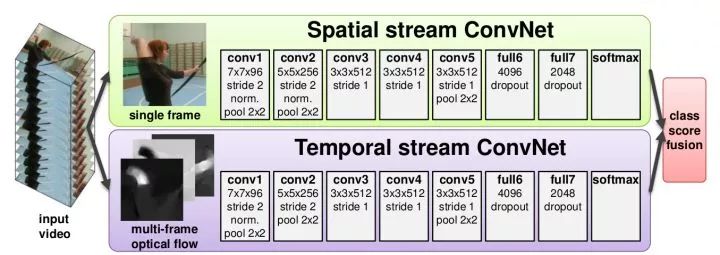

Image + Timing Two-Branch Structure This method uses two separate networks to capture image information and motion information over time, respectively. Among them, image information is obtained from a single frame of still images, which is a classic image classification problem. Motion information is obtained by optical flow, which captures the motion of the target between adjacent frames.

CNN+RNN captures long-distance dependence The previous method can only capture the dependencies between several frames of images. This method is designed to extract single-frame image features with CNN, and then use RNN to capture the dependencies between frames.

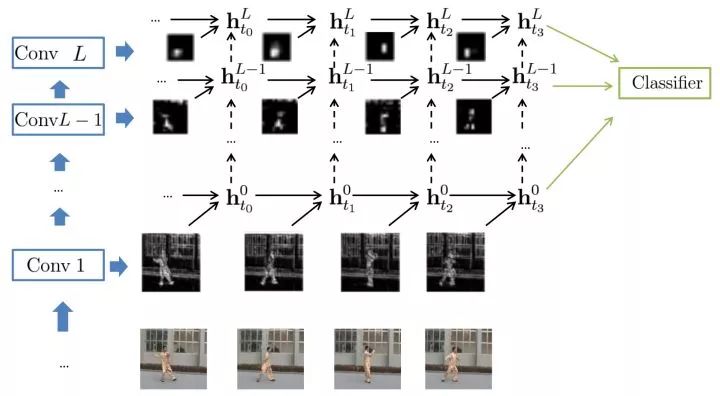

In addition, research efforts have attempted to combine CNN and RNN into one, enabling each convolutional layer to capture long-range dependencies.

Special thanks to the author | Zhang Wei (Nanjing University)

references

A. Agrawal, et al. VQA: Visual question answering. IJCV, 2017.

M. Arjovsky, et al. Wasserstein generative adversarial networks. ICML, 2017.

N. Ballas, et al. Delving deeper into convolutional networks for learning video representations. ICLR, 2016.

L. Bertinetto, et al. Fully-convolutional siamese networks for object tracking. ECCV Workshop, 2016.

W. Chen, et al. Compressing neural networks with the hashing trick. ICML, 2015.

Y. Cui, et al. Kernel pooling for convolutional neural networks. CVPR, 2017.

M. Danelljan, et al. ECO: Efficient convolution operators for tracking. CVPR, 2017.

E. Denton, et al. Exploiting linear structure within convolutional networks for efficient evaluation. NIPS, 2014.

C. Doersch. Tutorial on variational autoencoders. arXiv: 1606.05908, 2016.

J. Donahue, et al. Long-term recurrent convolutional networks for visual recognition and description. CVPR, 2015.

V. Dumoulin, et al. A learned representation for artistic style. ICLR, 2017.

Y. Gao, et al. Compact bilinear pooling. CVPR, 2016.

LA Gatys, et al. Texture synthesis using convolutional neural networks. NIPS, 2015.

LA Gatys, et al. Image style transfer using convolutional neural networks. CVPR, 2016.

I. Goodfellow, et al. Generative adversarial nets. NIPS, 2014.

I. Goodfellow. NIPS 2016 tutorial: Generative adversarial networks, arXiv: 1701.00160, 2016.

A. Gordo, et al. End-to-end learning of deep visual representations for image retrieval. IJCV, 2017.

S. Han, et al. Learning both weights and connections for efficient neural network. NIPS, 2015.

AG Howard, et al. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv: 1704.04861, 2017.

H. Hu, et al. Network trimming: A data-driven neuron pruning approach towards efficient deep architectures. arXiv: 1607.03250, 2016.

I. Hubara, et al. Binarized neural networks. NIPS, 2016.

A. Jiménez, et al. Class-weighted convolutional features for visual instance search. BMVC, 2017.

Y. Jing, et al. Neural style transfer: A review. arXiv: 1705.04058, 2017.

J. Johnson, et al. Perceptual losses for real-time style transfer and super-resolution. ECCV, 2016.

K. Kafle and C. Kanan. Visual question answering: Datasets, algorithms, and future challenges. CVIU, 2017.

Y. Kalantidis, et al. Cross-dimensional weighting for aggregated deep convolutional features. ECCV, 2016.

A. Karpathy, et al. Large-scale video classification with convolutional neural networks. CVPR, 2014.

A. Karpathy and L. Fei-Fei. Deep visual-semantic alignments for generating image descriptions. CVPR, 2015.

DP Kingma and M. Welling. Auto-encoding variational Bayes. ICLR, 2014.

S. Kong and C. Fowlkes. Low-rank bilinear pooling for fine-grained classification. CVPR, 2017.

A. Krizhevsky, et al. ImageNet classification with deep convolutional neural networks. NIPS, 2012.

T.-Y. Lin, et al. Bilinear convolutional neural networks for fine-grained visual recognition. TPAMI, 2017.

T.-Y. Lin and S. Maji. Improved Bilinear Pooling with CNNs. BMVC, 2017.

J. Liu, et al. Knowing when to look: Adaptive attention via a visual sentinel for image captioning. CVPR, 2017.

W. Lie, et al. Large-margin softmax loss for convolutional neural networks. ICML, 2016.

W. Liu, et al. SphereFace: Deep hypersphere embedding for face recognition. CVPR, 2017.

J. Lu, et al. Hierarchical question-image co-attention for visual question answering. NIPS, 2016.

J.-H. Luo, et al. Image categorization with resource constraints: Introduction, challenges and advances. FCS, 2017.

J.-H. Luo, et al. ThiNet: A filter level pruning method for deep neural network compression. ICCV, 2017.

L. Maaten and G. Hinton. Visualizing data using t-SNE. JMLR, 2008.

A. Mahendran and A. Vedaldi. Understanding deep image representations by inverting them. CVPR, 2015.

X. Mao, et al. Least squares generative adversarial networks. ICCV, 2017.

P. Molchanov, et al. Pruning convolutional neural networks for resource efficient inference. ICLR, 2017.

A. Mordvintsev, et al. Inceptionism: Going deeper into neural networks. Google Research Blog, 2015.

H. Nam, et al. Dual attention networks for multimodal reasoning and matching. CVPR, 2017.

JYH Ng, et al. Beyond short snippets: Deep networks for video classification. CVPR, 2015.

F. Radenović, et al. Fine-tuning CNN image retrieval with no human annotation. arXiv: 1711.02512, 2017.

A. Radford, et al. Unsupervised representation learning with deep convolutional generative adversarial networks. ICLR, 2016.

M. Rastegari, et al. XNOR-Net: ImageNet classification using binary convolutional neural networks. ECCV, 2016.

F. Schroff, et al. FaceNet: A unified embedding for face recognition and clustering. CVPR, 2015.

K. Simonyan, et al. Deep inside convolutional networks: Visualizing image classification models and saliency maps. ICLR Workshop, 2014.

K. Simonyan and A. Zisserman. Two-stream convolutional networks for action recognition in videos. NIPS, 2014.

V. Sindhwani, et al. Structured transforms for small-footprint deep learning. NIPS, 2015.

JT Springenberg, et al. Striving for simplicity: The all convolutional net. ICLR Workshop, 2015.

Y. Taigman, et al. DeepFace: Closing the gap to human-level performance in face verification. CVPR, 2014.

D. Tran, et al. Learning spatiotemporal features with 3D convolutional networks. ICCV, 2015.

A. Nguyen, et al. Synthesizing the preferred inputs for neurons in neural networks via deep generator networks. NIPS, 2016.

D. Ulyanov and A. Vedaldi. Instance normalization: The missing ingredient for fast stylization. arXiv: 1607.08022, 2016.

J. Valmadre, et al. End-to-end representation learning for correlation filter based tracking. CVPR, 2017.

O. Vinyals, et al. Show and tell: A neural image caption generator. CVPR, 2015.

C. Wu, et al. A compact DNN: Approaching GoogleNet-level accuracy of classification and domain adaptation. CVPR, 2017.

J. Wu, et al. Quantized convolutional neural networks for mobile devices. CVPR, 2016.

Z. Wu, et al. Deep learning for video classification and captioning. arXiv: 1609.06782, 2016.

J. Xu, et al. Unsupervised part-based weighting aggregation of deep convolutional features for image retrieval. AAAI, 2018.

K. Xu, et al. Show, attend, and tell: Neural image caption generation with visual attention. ICML, 2015.

J. Yosinski, et al. Understanding neural networks through deep visualization. ICML Workshop, 2015.

Z. Yu, et al. Multi-modal factorized bilinear pooling with co-attention learning for visual question answering. ICCV, 2017.

MD Zeiler and R. Fergus. Visualizing and understanding convolutional networks. ECCV, 2014.

L. Zhang, et al. SIFT meets CNN: A decade survey of instance retrieval. TPAMI, 2017.

Bilge float alarm,Bilge float alarm price,Bottom floating alarm

Taizhou Jiabo Instrument Technology Co., Ltd. , https://www.taizhoujbcbyq.com