The key to deep learning (or most areas of life) is drills. Exercise various problems - from image processing to speech recognition. Each question has its own unique nuances and methods.

But where can I get this data? Many of the research papers you see today use proprietary data sets that are not generally open to the public. And this becomes an obstacle if you learn and apply your newly acquired skills.

If you also encounter this problem, we have solutions available to you. We have selected a series of publicly available data sets for you to read in detail.

In this article, we have listed a series of high-quality data sets, each deep learning enthusiast can apply and improve their skills. Using these data sets will make you a better data scientist, and the knowledge you learn will be invaluable to your career. We also included papers with the latest technology (SOTA) results for you to browse and refine your model.

▌ How to use these data sets

The first thing to do - the capacity of these data sets is quite large! So make sure your network is downloading data at high speed, unlimited traffic, or with a lot of traffic.

There are many ways to use these datasets. You can use them to apply various deep learning skills. You can also use them to hone your skills, understand how to identify and build each problem, think about unique use cases and show everyone your findings so that everyone can see!

These data sets fall into three categories - image processing, natural language processing, and audio/voice processing.

Let us begin to understand more deeply!

Image processing

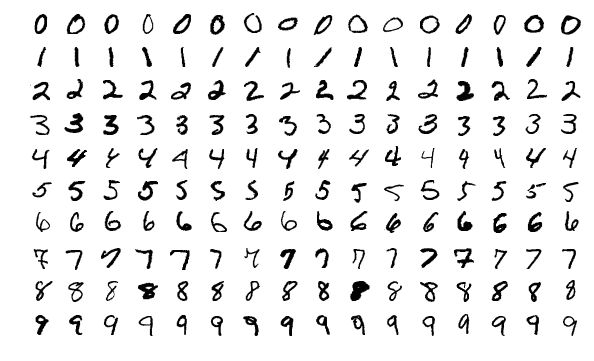

MNIST

MNIST is one of the most popular deep learning data sets. This is a handwritten digital data set containing a set of 60,000 example training sets and a set of 10,000 sample test sets. This is a good database for trying learning techniques and deep recognition patterns in real data, while trying to learn how to spend the least time and effort on data preprocessing.

Size: ~50 MB

Number of records: 70,000 pictures divided into 10 categories

SOTA:Dynamic Routing Between Capsules

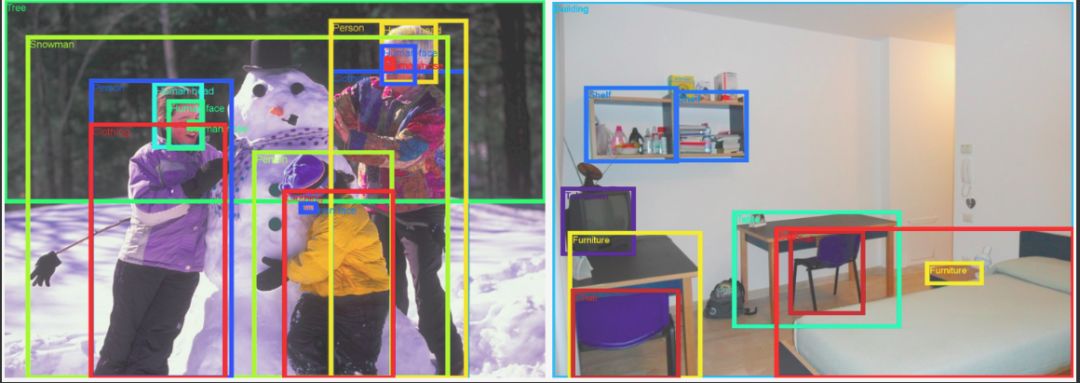

MS-COCO

COCO is a large and rich object detection, segmentation and caption data set. It has several features:

Object segmentation

Identification in the text

Super pixel material segmentation

330K image (> 200K mark)

1.5 million object instances

80 object categories

91 substance categories

5 captions per picture

250,000 people with key points

Size: ~25 GB (compressed)

Number of records: 330K images, 80 object categories, 5 subtitles per image, 250,000 people with key points

SOTA: Mask R-CNN

ImageNet

ImageNet is an image dataset organized according to the WordNet hierarchy. WordNet contains about 100,000 phrases, and ImageNet provides an average of about 1000 images to illustrate each phrase.

Size: ~150GB

Number of records: Total number of images: ~1,500,000; each has multiple bounding boxes and corresponding class labels

SOTA: Aggregated Residual Transformations for Deep Neural Networks

Open Images Dataset

Open Images is a data set containing nearly 9 million image URLs. These images have been annotated with thousands of categories of image-level label borders. The data set contains a training set of 9,011,219 images, a validation set of 41,260 images, and a test set of 125,436 images.

Size: 500 GB (compressed)

Recorded number: 9,011,219 images with more than 5k tags

SOTA: Resnet 101 image classification model (trained on V2 data): Model checkpoint, Checkpoint readme, Inference code.

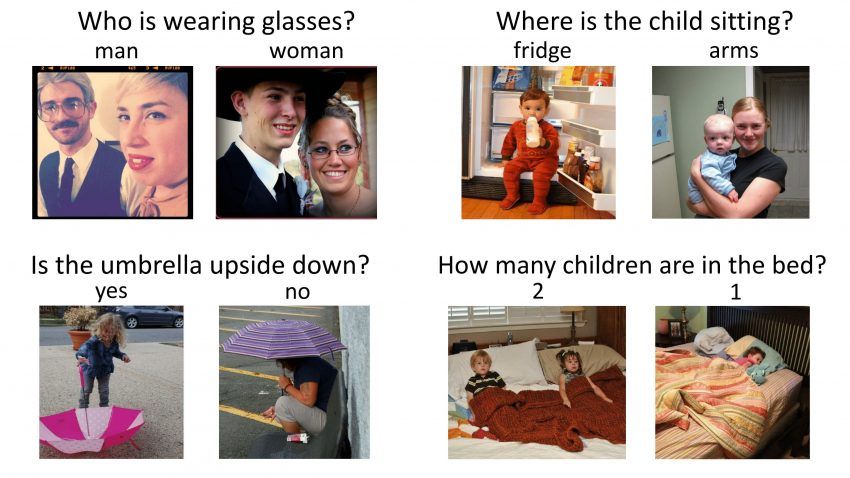

VisualQA

VQA is a data set that contains open questions about images. These questions need to be understood as visual and language. This dataset has some interesting features:

265,016 pictures (COCO and abstract scenes)

At least 3 questions per image (average 5.4 questions)

10 fact-based answers to each question

3 seemingly (but seemingly incorrect) answers to each question

Automatic assessment indicator

Size: 25 GB (compressed)

Number of records: 265,016 pictures, at least 3 questions per picture, 10 questions per question

SOTA:Tips and Tricks for Visual Question Answering: Learnings from the 2017 Challenge

The Street View House Numbers (SVHN)

This is a real-world image data set for developing object detection algorithms. These require only minimal data preprocessing. It is similar to the MNIST data set mentioned in this list but has more tag data (over 600,000 images). The data was collected from the house numbers viewed in Google Street View.

Size: 2.5 GB

Number of records: 6,30,420 of 10 courses

SOTA:Distributional Smoothing With Virtual Adversarial Training

CIFAR-10

This is another data set for image classification. It contains 60 categories of 60,000 images (each class represented as a row in the above figure). There are a total of 50,000 training images and 10,000 test images. The data set is divided into 6 parts - 5 training batches and 1 test batch. There are 10,000 images per batch.

Size: 170 MB

Number of records: 60,000 images in 10 categories

SOT: ShakeDrop regularization

Fashion-MNIST

Fashion-MNIST contains 60,000 training images and 10,000 test images. It is a MNIST-like fashion product database. Developers believe that MNIST has been overused, so they use it as a direct replacement for MNIST. Each picture is displayed in grayscale and associated with 10 categories of tags.

Size: 30 MB

Number of records: 70,000 images in 10 categories

SOTA:Random Erasing Data Augmentation

Natural language processing

IMDB Reviews

This is a movie enthusiast's dream data set. It means binary emotion classification and has more data than any previous dataset in this field. In addition to the training and test evaluation examples, there are more untagged data available. Includes text and pre-processed word bag formats.

Size: 80 MB

Number of records: 25,000 highly differentiated movie reviews for training, 25,000 tests

SOTA: Learning Structured Text Representations

Twenty Newsgroups

As the name implies, this data set contains information about newsgroups. In order to select this data set, 1000 news articles were selected from 20 different newsgroups. These articles have certain characteristics, such as subject lines, signatures, and references.

Size: 20 MB

Number of records: 20,000 messages from 20 newsgroups

DOTA: Very Deep Convolutional Networks for Text Classification

Sentiment140

Sentiment140 is a data set that can be used for sentiment analysis. A popular data set is perfect for starting your NLP journey. Emotions have been previously removed from the data. The final data set has the following six characteristics:

The polarity of the tweet

Tweet ID

Tweet date

problem

Tweet user name

Tweet text

Size: 80 MB (compressed)

Recorded number: 160,000 tweets

SOTA: Assessing State-of-the-Art Sentiment Models on State-of-the-Art Sentiment Datasets

WordNet

As mentioned in the above ImageNet data set, WordNet is a large collection of English synonym. Synonym sets are synonyms that each describe different concepts. WordNet's structure makes it a very useful tool for NLP.

Size: 10 MB

Number of records: 117,000 synonym sets are associated with other synonym sets through a small number of "conceptual relationships."

SOTA: Wordnets: State of the Art and Perspectives

Yelp Reviews

This is an open source data set that Yelp released for learning purposes. It contains millions of user reviews, business attributes, and over 200,000 photos from multiple metropolitan areas. This is a very common global NLP challenge dataset.

Size: 2.66 GB JSON, 2.9 GB SQL and 7.5 GB photos (all compressed)

Number of records: 5,200,000 reviews, 174,000 commercial properties, 200,000 images and 11 metropolitan areas

SOTA:Attentive Convolution

The Wikipedia Corpus

This dataset is a collection of the full text of Wikipedia. It contains nearly 1.9 billion words from more than 4 million articles. What makes this a powerful NLP dataset is that you can search through words, phrases, or parts of the paragraph itself.

Size: 20 MB

Number of records: 4,400,000 articles, 1.9 billion words

SOTA: Breaking The Softmax Bottelneck: A High-Rank RNN ​​language Model

The Blog Authorship Corpus

This data set contains thousands of blogger blog posts collected from blogger.com. Each blog is provided as a separate file. Each blog contains at least 200 commonly used English words.

Size: 300 MB

Recorded number: 681,288 posts, more than 140 million words

SOTA: Character-level and Multi-channel Convolutional Neural Networks for Large-scale Authorization Attribution

Machine Translation of Various Languages

This dataset contains training data in four European languages. The task here is to improve the current translation method. You can participate in any of the following language combinations:

English-Chinese and Chinese-English

English - Czech and Czech - English

English - Estonian and Estonian - English

English - Finnish and Finnish - English

English - German and German - English

English - Kazakh and Kazakh - English

English - Russian and Russian - English

English - Turkish and Turkish - English

Size: ~15 GB

Number of records: approximately 30,000,000 sentences and their translation

SOTA:Attention Is All You Need

▌ Audio/voice processing

Free Spoken Digit Dataset

Another data set created by MNIST in this list! This is created to solve the recognition of spoken digits in audio samples. This is an open source dataset, so hopefully it will continue to grow as people continue to contribute more samples. At present, it contains the following features:

3 speakers

1500 recordings (50 per speaker per number)

English pronunciation

Size: 10 MB

Number of records: 1500 audio samples

SOTA: Raw Waveform-based Audio Classification Using Sample-level CNN Architectures

Free Music Archive (FMA)

FMA is a music analysis data set. The data set includes full length and HQ audio, pre-calculated features, as well as audio tracks and user-level metadata. It is an open source data set for evaluating some tasks in the MIR. The following is a list of dataset csv files and what they contain:

Tracks.csv: 106 metadata per track, such as ID, title, artist, genre, tag, and number of plays.

Genres.csv: All 163 style IDs with their name and origin (used to infer genre levels and top genres).

Features.csv: Common features extracted with librosa.

Echonest.csv: An audio feature provided by Echonest (now Spotify) as a subset of the 13,129 tracks.

Size: ~1000 GB

Number of records: about 100,000 tracks

SOTA: Learning to Recognize Musical Genre from Audio

Ballroom

The data set contains dancing dance audio files. Provides excerpts of some of the many dance style features in real audio format. The following are some of the characteristics of the data set:

Total number of instances: 698

Duration: about 30 seconds

Total duration: about 20940 seconds

Size: 14GB (compressed)

Number of records: about 700 audio samples

SOTA: A Multi-Model Approach To Beat Tracking Considering Heterogeneous Music Styles

Million Song Dataset

Million Song Dataset is a free collection of audio features and metadata for a million contemporary pop music tracks. the purpose is:

Encourage research on algorithms that scale to commercial scale

Provide reference data sets for evaluation studies

As a shortcut for creating large data sets using the API (eg The Echo Nest)

Help new researchers start work in the MIR field

The core of the data set is the feature analysis and metadata of one million songs. This data set does not contain any audio, it is just a derived function. Sample audio can be obtained from services such as 7digital by using the code provided by Columbia University.

Size: 280 GB

Recorded number: PS - It's one million songs!

SOTA: Preliminary Study on a Recommender System for the Million Songs Dataset Challenge

LibriSpeech

This data set is a large corpus of approximately 1000 hours of English speech. The data comes from the audio books of the LibriVox project. They have been split and properly aligned. If you are looking for a starting point, check out the prepared acoustic models trained on kaldi-asr.org and the language models that are suitable for evaluation at http://.

Size: ~60 GB

Recorded number: 1000 hours of speech

SOTA: Letter-Based Speech Recognition with Gated ConvNets

VoxCeleb

VoxCeleb is a large-scale speaker identification data set. It contains about 100,000 words from about 1,251 celebrities from YouTube videos. Most of the data is gender-balanced (male 55%). These celebrities span different accents, occupations and ages. There is no overlap between development and test sets. This is an interesting use case for the independence and recognition of which superstar's audio.

Size: 150 MB

Number of records: 100,000 words of 1,251 celebrities

SOTA: VoxCeleb: a large-scale speaker identification dataset

Analyze the Vidhya practice questions: For your practice, we also provide practical life questions and data sets so that you can actually practice. In this section, we have listed deep learning practices on our DataHack platform.

Twitter Sentiment Analysis

The speech of hate speeches in the form of racism and gender discrimination has become a thorny issue on Twitter, and it is important to separate such tweets from others. In this practical issue, we also provide tweet data for normal and hateful tweets. Your job as a data scientist is to determine which tweets are hate-type tweets and which are not.

Size: 3 MB

Recorded number: 31,962 Tweets

Age Detection of Indian Actors

This is a fascinating challenge for any deep learning enthusiast. The data set contains images of thousands of Indian actors and your task is to determine their age. All images are manually selected and cut from the video frame, which makes the scale, pose, expression, illuminance, age, resolution, occlusion and makeup highly disturbing.

Size: 48 MB (compressed)

Number of records: 19,906 images in training set and 6663 images in test set

SOTA: Hands on with Deep Learning – Solution for Age Detection Practice Problem

Urban Sound Classification

This dataset contains more than 8,000 city sound excerpts from 10 categories. This practical question is to introduce you to audio processing in common classification schemes.

Size: training set - 3 GB (compressed), test set - 2 GB (compressed)

Number of records: sound clips from 8732 cities in 10 categories (<= 4s)

If you know of other open source datasets that can be used to recommend others to begin their journey of deep learning/unstructured datasets, please feel free to recommend them to us and attach the reasons why these datasets should be included.

If the reasons are good, I will list them. We very much welcome you in the comment area to let us know the experience of using these data sets. Finally, I wish you all a happy learning!

Silicone Keypad,Silicone Keyboard,Customized Rubber Keypad,Custom Silicone Keypad

CIXI MEMBRANE SWITCH FACTORY , https://www.cnjunma.com