The world is full of visual information that our eyes cannot see. Work by two MIT computer vision scientists shows that, with sophisticated image processing, you can "see" things hidden around corners and recover images that are 1,000 times fainter than those in ordinary photos.

In 2012, while vacationing on the Spanish coast, MIT computer vision scientist Antonio Torralba noticed stray shadows on the wall of his hotel room that did not seem to be cast by anything.

Torralba realized that the discolored patches on the wall were not shadows at all, but a faint, inverted image of the courtyard outside the window. The window was acting as the simplest kind of pinhole camera, in which light passes through a small opening and forms an inverted image on the other side. On a well-lit wall, such images are almost imperceptible. What struck Torralba was that the world is full of visual information that our eyes cannot see.

"These images are invisible to us," he said, "but they always exist around us."

Inspired by this phenomenon, Torralba and his colleague, MIT professor Bill Freeman, coined the term "accidental cameras" for it: windows, corners, indoor plants and other common objects can all create subtle images of their surroundings. These images are 1,000 times dimmer than everything else in view and are usually invisible to the naked eye. "We found a way to extract these images and make them visible," Freeman explained.

In the first paper they collaborated on, Freeman and Torralba showed that by filming the changing light on the wall of a room with an iPhone and processing the footage, they could reveal the scene outside the window. Last fall, they and their collaborators reported that by filming the ground near a corner, they could detect people moving on the other side of it. This summer, they filmed an indoor plant and used the different shadows cast by its leaves to reconstruct a three-dimensional image of the rest of the room.

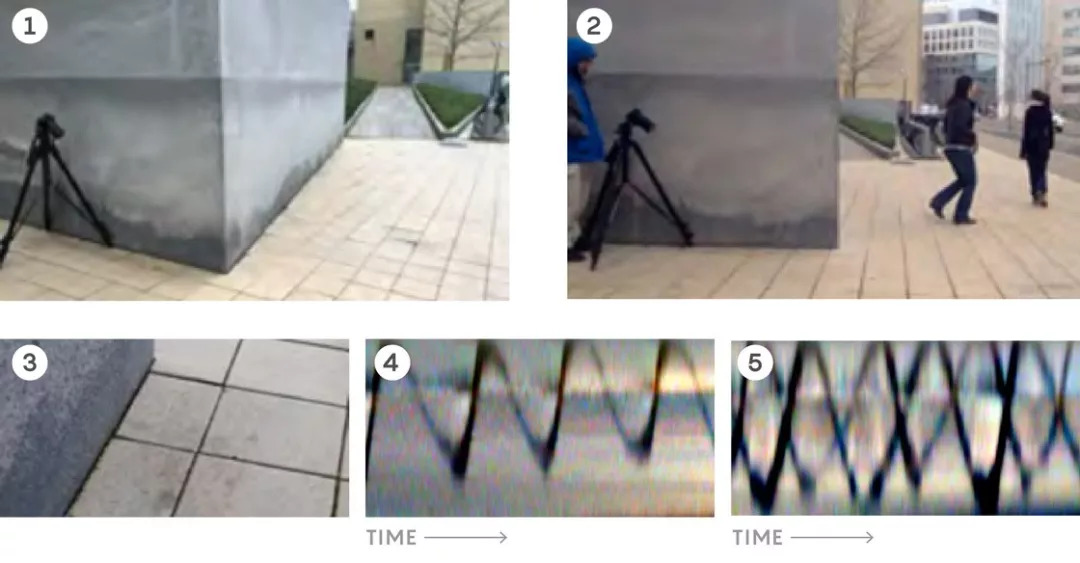

Antonio Torralba noticed that the window of his hotel room was acting as an accidental pinhole camera (1). Covering most of the window with cardboard to shrink the pinhole sharpened (3) the faint image of the courtyard on the wall (2). Turning the image upside down (4) shows the scene outside the window.

Torralba and Freeman's 2012 paper on "accidental cameras" helped steer researchers toward looking around corners and inferring information that is not directly in view, a field known as "non-line-of-sight imaging."

In 2016, building partly on these results, the US Defense Advanced Research Projects Agency (DARPA) launched the $27 million REVEAL program, which funds a number of new laboratories across the country. Since then, a stream of new insights and mathematical tools has made non-line-of-sight imaging more powerful and practical.

In addition to military and espionage applications, the researchers said that the technology has potential applications in autonomous vehicles, robotic vision, medical imaging, astronomy, space exploration, and search and rescue missions.

Torralba said that when he and Freeman started this research, they had no particular application in mind. They were simply digging into the basics of how images form and what makes something a camera, which led naturally to a fuller study of how light interacts with the objects and surfaces around us. They began to see things that no one had thought to look for. Torralba noted that psychological studies have shown that humans are very poor at interpreting shadows. One reason may be that many of the shadows we see are not really shadows at all.

"Anti-pinhole" perspective camera

Light carrying images of scenes outside our field of view constantly strikes walls and other surfaces and reflects into our eyes. So why can't we see those images? The answer is that too many of these rays are traveling in too many different directions, so the image is washed out.

To form an image that the human eye can see, the light falling on a surface must be tightly restricted, so that only a particular set of rays reaches it. That is what a pinhole camera does. Torralba and Freeman's original insight in 2012 was that many objects and features in our environment naturally restrict light in this way, producing faint images that a computer can detect.

The smaller the aperture of a pinhole camera, the sharper the image, because each point on the imaged object then sends only a single ray, at the right angle, through the hole. The window of Torralba's hotel room in 2012 was too large to produce a sharp image. Both he and Freeman knew that, in general, such accidental pinhole cameras are rare. However, they realized that an "anti-pinhole" (or "pinspeck") camera, formed by any small object that blocks light, can image the entire area.
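The link between a wide window, a pinhole and an anti-pinhole can be sketched with a one-dimensional toy model. This is a minimal illustration and not the authors' code: the scene values, the window width and the function names are all assumptions. It relies on one property of light, that intensities add, so the wall image seen through an open window minus the wall image seen with a small occluder in that window equals the image a pinhole of the occluder's size would have formed.

```python
# 1D toy model (all numbers are illustrative assumptions).
# The aperture plane sits midway between scene and wall, so a ray from
# scene position s to wall position w crosses it at (s + w) / 2.

scene = {-3: 9, -2: 1, -1: 4, 0: 7, 1: 2, 2: 8, 3: 3}  # position -> brightness


def wall_image(transmits):
    """Sum, for each wall position, the scene light the aperture lets through."""
    return {
        w: sum(b for s, b in scene.items() if transmits((s + w) / 2))
        for w in scene
    }


def open_window(a):
    return abs(a) <= 2          # wide opening: many rays reach each wall point


def pinhole(a):
    return a == 0               # ideal pinhole: one ray per wall point


def window_with_pin(a):
    return open_window(a) and not pinhole(a)   # small occluder in the window


washed_out = wall_image(open_window)
with_pin = wall_image(window_with_pin)

# Subtracting the two wall images leaves exactly the light the pin blocked,
# which is the image a pinhole at the same spot would have formed.
recovered = {w: washed_out[w] - with_pin[w] for w in scene}

print(washed_out)   # blurred: each wall point mixes several scene points
print(recovered)    # inverted copy of the scene
```

In this model the recovered image at wall position `w` is the scene brightness at position `-w`, the same inversion Torralba saw on his hotel wall.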

Computer vision scientists and MIT professors Bill Freeman (above) and Antonio Torralba (below).

In addition, Freeman and his colleagues have designed algorithms that detect and amplify subtle color changes, such as those caused by blood flow in a person's face, as well as tiny motions. They can now readily pick out motion as small as one-hundredth of a pixel, which would normally be buried in noise.

Their solution is to mathematically transform the image into a set of sine waves. Crucially, in the transformed space the signal is not swamped by noise, because each sine wave represents an average over many pixels, so the noise is spread out across them. Researchers can therefore detect how far a sine wave shifts from one frame of a video sequence to the next, amplify that shift, and then transform the data back.
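The idea of reading a tiny motion off the shift of a sine wave can be sketched as follows. This is a minimal illustration under strong assumptions, not Freeman's algorithm: the "frame" is a one-dimensional, noise-free, single-frequency pattern, the transform is a single discrete Fourier coefficient, and the constants `N`, `K` and `GAIN` are arbitrary.

```python
import cmath
import math

N = 256            # pixels in a 1D "frame" (illustrative)
K = 8              # spatial frequency of the pattern, in cycles per frame
TRUE_SHIFT = 0.01  # motion between frames: one-hundredth of a pixel
GAIN = 50          # magnification factor (illustrative)


def frame(shift):
    """A sinusoidal brightness pattern displaced by `shift` pixels."""
    return [math.cos(2 * math.pi * K * (n - shift) / N) for n in range(N)]


def coefficient(pixels):
    """Project the frame onto one complex sine wave (a single DFT bin)."""
    return sum(p * cmath.exp(-2j * math.pi * K * n / N) for n, p in enumerate(pixels))


def estimate_shift(before, after):
    """Read the displacement off the change in the wave's phase."""
    dphi = cmath.phase(coefficient(after) / coefficient(before))
    return -dphi * N / (2 * math.pi * K)


estimated = estimate_shift(frame(0.0), frame(TRUE_SHIFT))
magnified_frame = frame(GAIN * estimated)   # re-render with the motion amplified

print(f"estimated shift: {estimated:.4f} px, magnified to {GAIN * estimated:.2f} px")
```

Because the coefficient sums over all `N` pixels, independent per-pixel noise tends to average out in it, which is the property the paragraph above describes.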

Researchers are now beginning to combine these techniques to recover hidden visual information. In October last year, a paper by Katie Bouman, then Freeman's graduate student (now at the Harvard-Smithsonian Center for Astrophysics), and colleagues showed that the corner of a building can serve as a camera that yields a rough picture of what is around the corner.

By filming the shadowy penumbra on the ground near a corner (1), information about objects around the corner (2) can be obtained. When objects in the hidden region move, the light they cast onto the penumbra sweeps through different angles relative to the wall. These subtle changes in intensity and color are usually invisible to the naked eye (3), but algorithms can enhance them. Rough videos of the light coming off different angles of the penumbra reveal one person moving (4) and two people moving (5) around the corner.

Like pinholes and anti-pinholes, edges and corners also restrict the passage of light. Using ordinary recording equipment, such as an iPhone, Bouman and company filmed the "penumbra" of a building's corner: the shadowy region that is lit by only part of the light coming from the hidden area around the corner. If a person in a red shirt walks by there, for example, the shirt casts a small amount of red light into the penumbra. As the person walks, that red light sweeps across the penumbra. It is invisible to the naked eye but becomes clear after processing.
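Why a corner behaves like a camera can be sketched in a few lines. This is a simplified model and not Bouman's implementation: it assumes the hidden scene is split into a handful of angular slices, that a floor point at a given angle around the corner receives light from every slice it can see, and that the ambient light level is uniform. All values are illustrative.

```python
from itertools import accumulate

AMBIENT = 100.0   # light reaching the floor from the visible side (illustrative)


def penumbra(hidden):
    """Floor brightness at increasing angles around the corner.

    A floor point at angle step i sees hidden-scene slices 0..i-1, so its
    brightness is the ambient level plus a running sum of those slices.
    """
    return [AMBIENT + total for total in accumulate(hidden, initial=0.0)]


def recover(floor):
    """Differencing neighbouring angles isolates each hidden slice."""
    return [b - a for a, b in zip(floor, floor[1:])]


# A person in a red shirt adds a little light in one angular slice,
# then walks one slice further between frames.
frame_1 = penumbra([0.0, 0.0, 0.5, 0.0, 0.0])
frame_2 = penumbra([0.0, 0.0, 0.0, 0.5, 0.0])

print(frame_1)            # a 0.5% step on top of 100: invisible to the eye
print(recover(frame_1))
print(recover(frame_2))
```

The result is only a one-dimensional picture, indexed by angle, which matches the article's description of the corner camera as yielding a rough image.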

In groundbreaking work published in June, Freeman and his colleagues reconstructed the "light field" of a room, a picture of the intensity and direction of light throughout the room, from the shadows cast by a leafy plant near a wall. Each leaf acts as an anti-pinhole camera, blocking a different set of light rays. Comparing each leaf's shadow with the rest reveals the set of rays it is missing, and with it a piece of the hidden scene. The researchers can then piece these images together.

This "light field" approach yields clearer images than the earlier "accidental camera" work, because the algorithm is given prior knowledge about the world: the shape of the plant, the assumption that natural images tend to be smooth, and other priors that let the researchers draw inferences from noisy signals, which helps sharpen the resulting image. The light field technique "needs to have a good understanding of the surrounding environment for image reconstruction, but it can provide a lot of information," Torralba said.

Using scattered light to construct a three-dimensional geometric structure of a hidden target

While Freeman, Torralba and others uncover "invisible images" that have been there all along, elsewhere on the MIT campus Ramesh Raskar, a computer vision scientist and TED speaker who aims to "change the world," takes an approach called "active imaging": he uses expensive, specialized camera-laser systems to create high-resolution images of what is around corners.

MIT computer vision scientist Ramesh Raskar pioneered an active non-line-of-sight imaging technique.

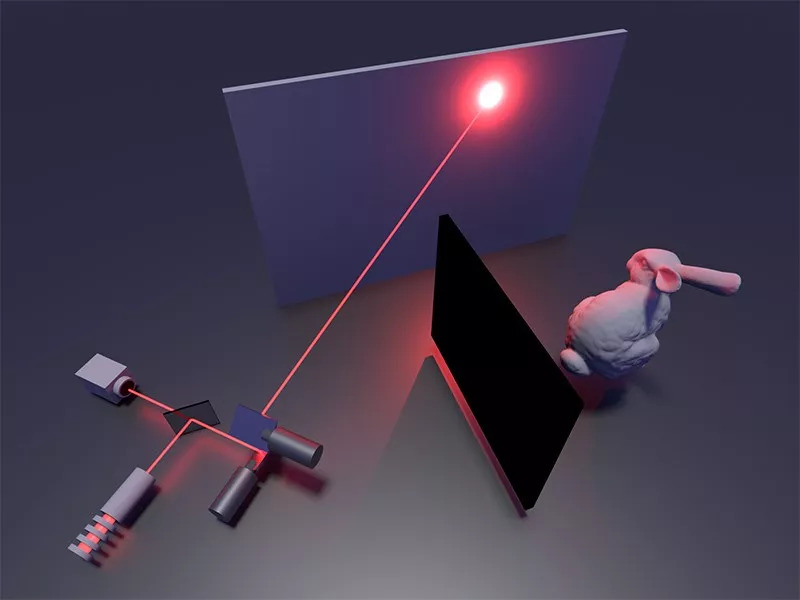

In 2012, Raskar's team pioneered a technique that fires laser pulses at a wall so that a small fraction of the scattered light bounces around the barrier. In the moments after each pulse, a "streak camera," which records individual photons at billions of frames per second, detects the photons that bounce back from the wall. Measuring the returning photons' time of flight gives the distance they traveled, from which the detailed three-dimensional geometry of the hidden object can be reconstructed. One complication is that the laser must be raster-scanned across the wall to form a three-dimensional image. Suppose, for example, that a person is hidden around the corner.

"So, light from a specific point on the head, a specific point on the shoulder, and a specific point on the knee may all reach the camera at the same time," Raskar said. "But if I fire the laser to a slightly different location, the light from the above three points will not return at the same time." All the signals have to be combined, and the so-called "inverse problem" solved, to reconstruct the hidden three-dimensional geometry.

Raskar's original algorithm for solving the inverse problem is computationally intensive, and his equipment costs $500,000. However, significant progress has been made in simplifying mathematical models and cutting costs.
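The timing ambiguity Raskar describes, and the reason scanning the laser resolves it, can be sketched with a little geometry. This is a simplified illustration and not his team's method: it assumes the wall is the plane z = 0, that light leaves and returns through the same wall spot, and that the hidden points sit at the hypothetical coordinates below (in metres).

```python
import math

C = 299_792_458.0   # speed of light in m/s


def arrival_time(spot, point):
    """Seconds for light to travel wall spot -> hidden point -> same wall spot."""
    return 2 * math.dist(spot, point) / C


def path_length(seconds):
    """Distance covered by a photon in the measured flight time."""
    return C * seconds


# Hypothetical hidden points, all exactly 5 m from the first laser spot.
head, shoulder, knee = (0.0, 4.0, 3.0), (3.0, 0.0, 4.0), (4.0, 0.0, 3.0)

spot_a = (0.0, 0.0, 0.0)
spot_b = (1.0, 0.0, 0.0)   # laser moved 1 m along the wall

times_a = [arrival_time(spot_a, p) for p in (head, shoulder, knee)]
times_b = [arrival_time(spot_b, p) for p in (head, shoulder, knee)]

print([f"{t * 1e9:.2f} ns" for t in times_a])   # identical: the points are confused
print([f"{t * 1e9:.2f} ns" for t in times_b])   # distinct: the ambiguity is broken
print(f"{path_length(times_a[0]):.1f} m round trip")
```

A single measurement only says that a reflecting point lies somewhere at a given distance from the wall spot. Combining many spots narrows down where, which is the inverse problem mentioned above.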

The experimental setup of the Raskar team

In active non-line-of-sight imaging, laser light bounces off a wall, scatters off a hidden object, and then returns to the spot it came from. The reflected light can be used to reconstruct the three-dimensional structure of the object.

In the past, algorithms were bogged down by a procedural detail: researchers usually chose to detect photons returning from a different spot on the wall than the one the laser was aimed at, so that the camera could avoid the laser's backscattered light. By pointing the laser and the camera at almost the same spot, however, the outgoing and incoming photons can be mapped onto the same "light cone."

Whenever light scatters off a surface, it forms an expanding sphere of photons, and this sphere traces out a cone as it extends through time. O'Toole of Stanford University and colleagues adapted the physics of light cones, developed in the early 20th century by Albert Einstein's teacher Hermann Minkowski, into a concise expression relating photon flight times to the positions of scattering surfaces. He calls this the "light-cone transform."
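One way to see why the cone picture simplifies the mathematics is to look at arrival times for a single hidden point. The sketch below shows only that geometric intuition and is not O'Toole's algorithm: it assumes the laser and camera share each wall spot, and the depth and offsets are hypothetical values in metres.

```python
import math

C = 299_792_458.0   # speed of light in m/s
DEPTH = 0.8         # hypothetical distance of a hidden point from the wall


def round_trip_time(offset):
    """Flight time wall -> hidden point -> wall, measured `offset` metres
    along the wall from the spot directly facing the hidden point."""
    return 2 * math.hypot(offset, DEPTH) / C


offsets = [0.0, 0.2, 0.4, 0.6]
times = [round_trip_time(x) for x in offsets]

# In raw time the arrivals trace a curve (a slice of the cone). Substituting
# v = (c * t / 2) ** 2 straightens it: v - offset**2 is the same at every spot.
v = [(C * t / 2) ** 2 for t in times]
depth_squared = [vi - x ** 2 for vi, x in zip(v, offsets)]

print([f"{t * 1e9:.3f} ns" for t in times])
print([round(d, 6) for d in depth_squared])
```

After the substitution, every wall spot reports the same quantity, the squared depth. That is a hint of why a change of variables can turn the reconstruction into a much cheaper computation.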

Self-driving cars already use lidar systems for direct imaging, and it is conceivable that they may one day also carry cameras that can see around corners. Andreas Velten, the first author of Raskar's seminal 2012 paper, predicted: "In the near future, these laser-SPAD sensors will be able to appear on handheld devices." The task now is to make the technique work "in more complex scenarios" and realistic scenes, Velten said. "Instead of having to set a white object and the surrounding black background very carefully, our goal is a point-and-shoot camera."

Future directions: imaging for the military, astronomy and medicine

One day, non-line-of-sight imaging could aid rescue teams, firefighters and autonomous robots. Velten is working with NASA's Jet Propulsion Laboratory on a project aimed at remotely imaging the interiors of caves on the moon. Meanwhile, Raskar and company have used their approach to read the first few pages of a closed book and to see a short distance through fog.

Besides reconstructing audio, Freeman's motion magnification algorithm could prove useful in medical and security devices, or in astronomy for detecting small motions. The algorithm "is a very good idea," said David Hogg, an astronomer and data scientist at New York University and the Flatiron Institute. "I think it is necessary to use this technology in astronomy."

When asked about the privacy issues this technology raises, Freeman said: "This is indeed a problem. In my career, I think about it a lot." Freeman, who wears glasses and has been taking photographs since he was a child, said that when he first started out, he did not want to work on anything related to military or spying applications. But over time, he came to think that "technology is a tool. It can be used in many different ways. If you always try to avoid anything that may have military uses, you will never do anything useful." He added that even within the military, the technology can be put to a wide range of uses.

What excites him, however, is not the technological possibilities but simply finding phenomena that are hidden from ordinary view. "I think there are many things in the world waiting for us to discover," he said.
